Research Directions

We develop machine learning tools for behavioral and neural data analysis, and conversely try to learn from the brain to solve challenging machine learning problems.

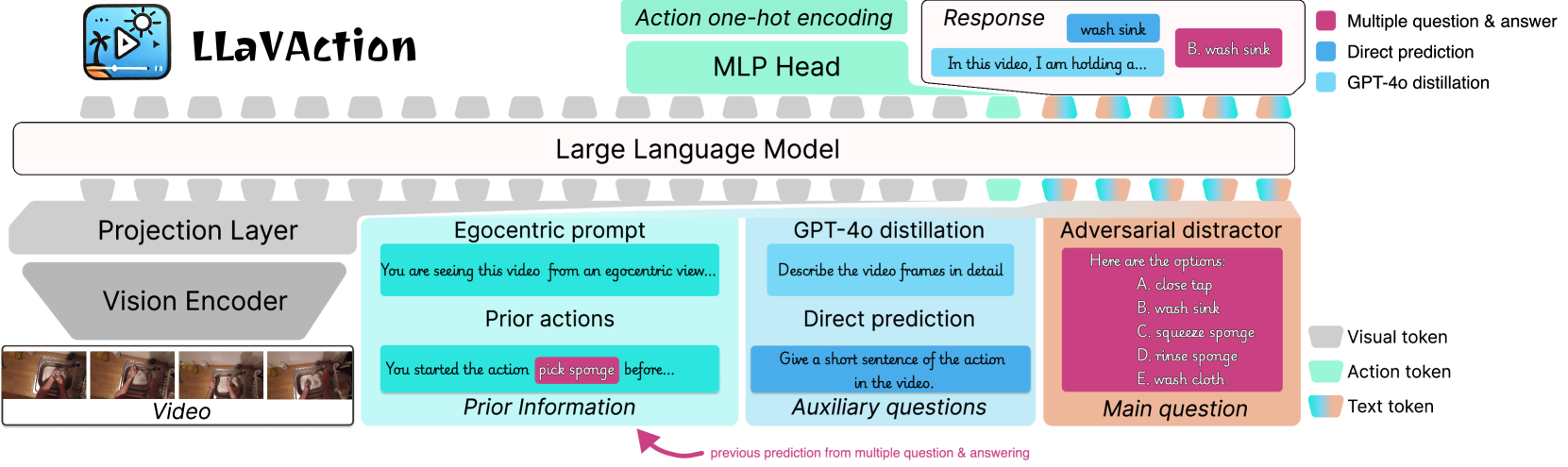

Machine Learning for Behavior Analysis

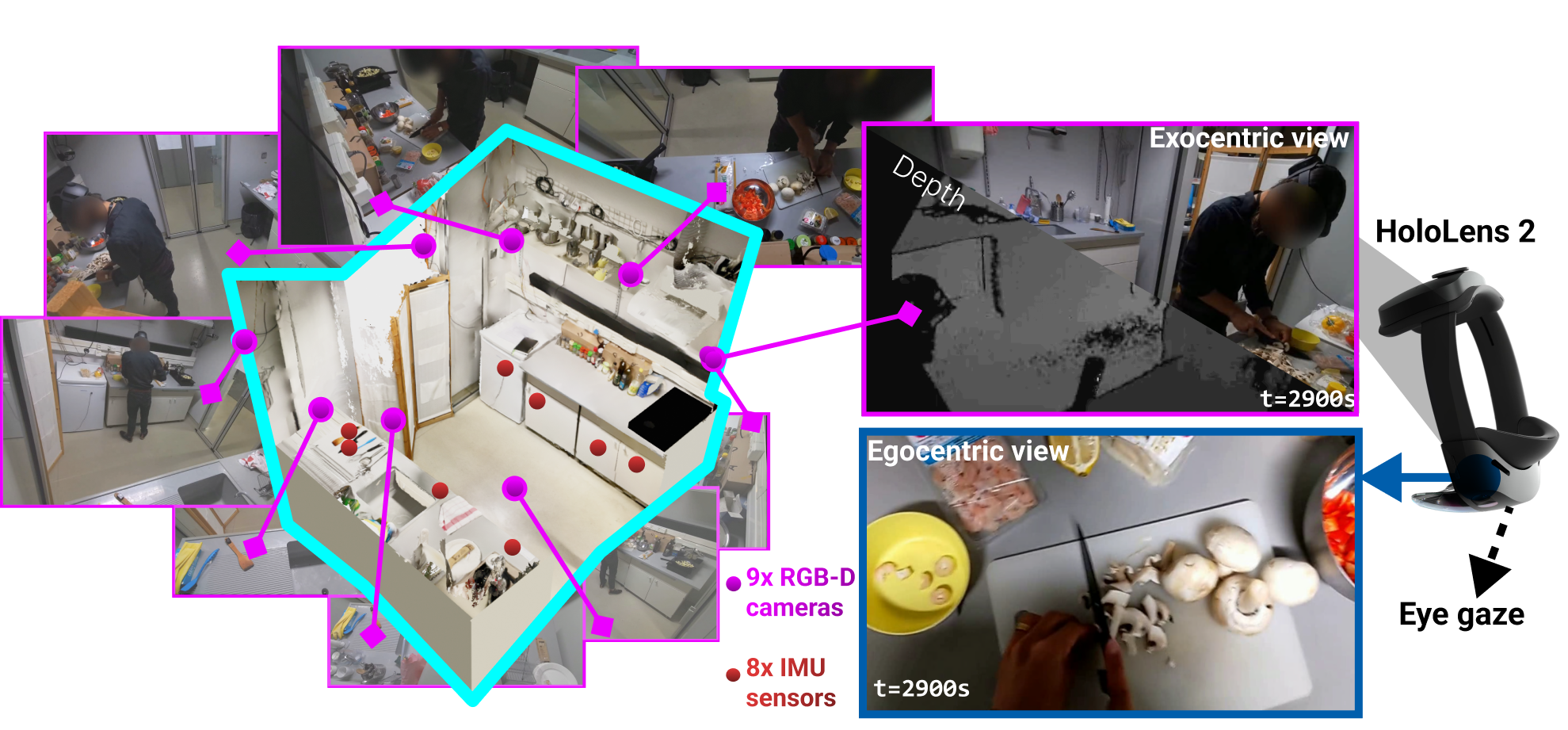

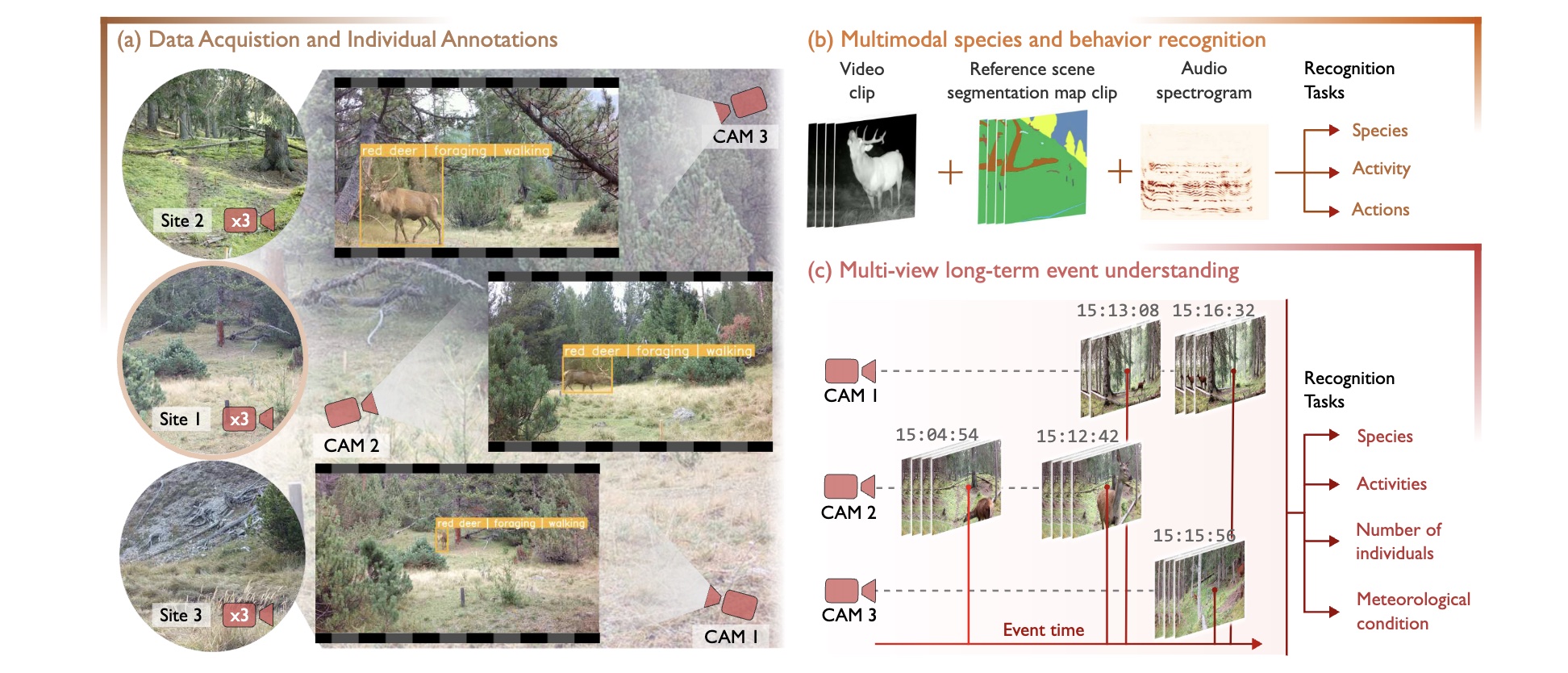

We strive to develop tools for the analysis of animal behavior. Behavior is a complex reflection of an animal's goals, state and character. Accurately measuring behavior is therefore crucial for advancing basic neuroscience, as well as for the study of various neural and psychiatric disorders. However, measuring behavior from video is also a challenging computer vision and machine learning problem.

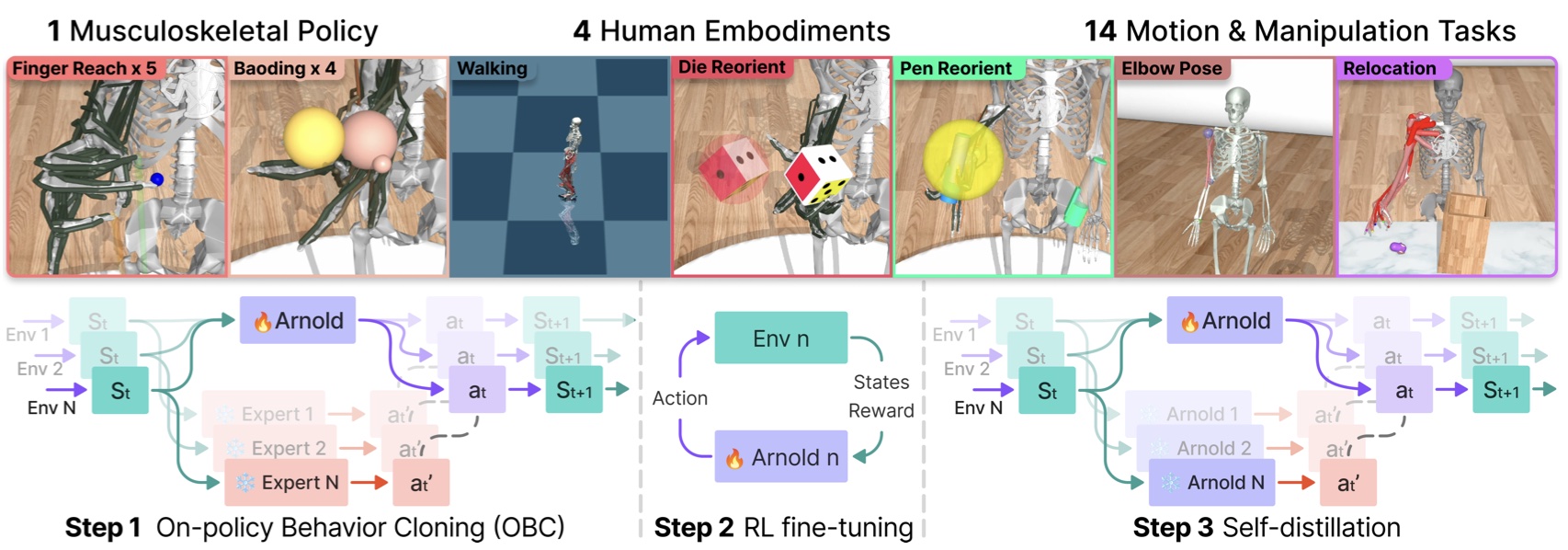

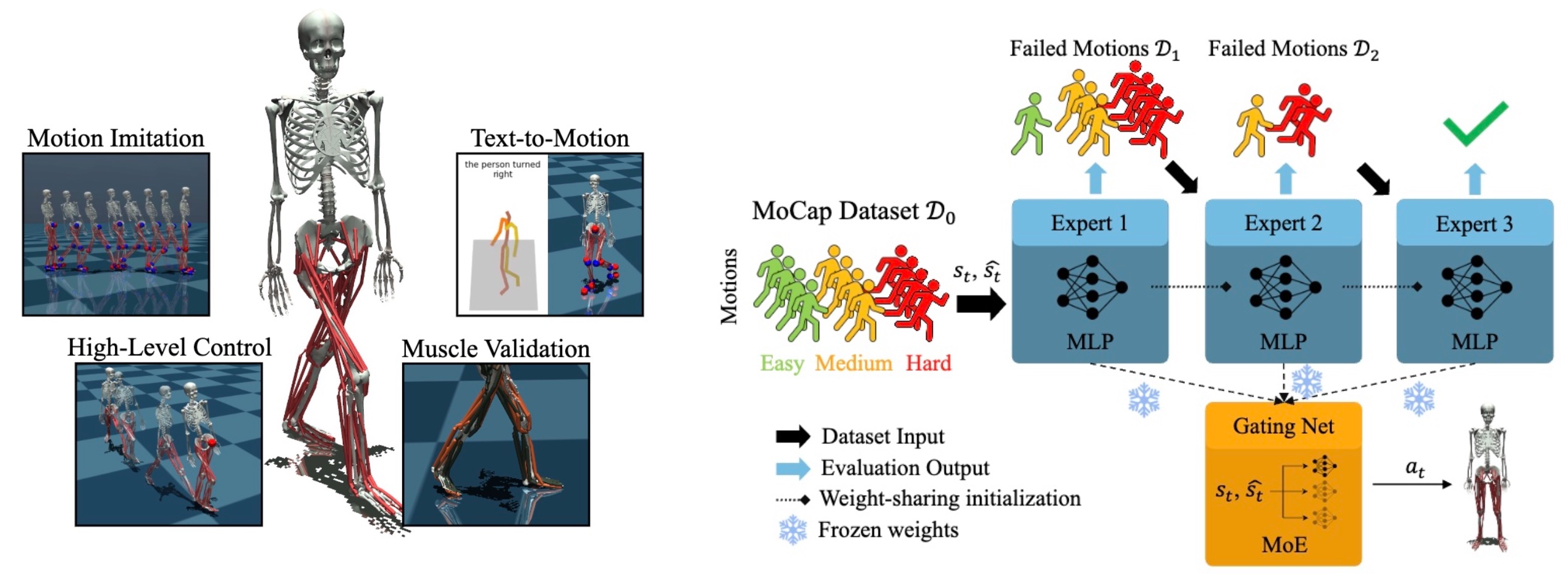

Brain-inspired Motor Skill Learning

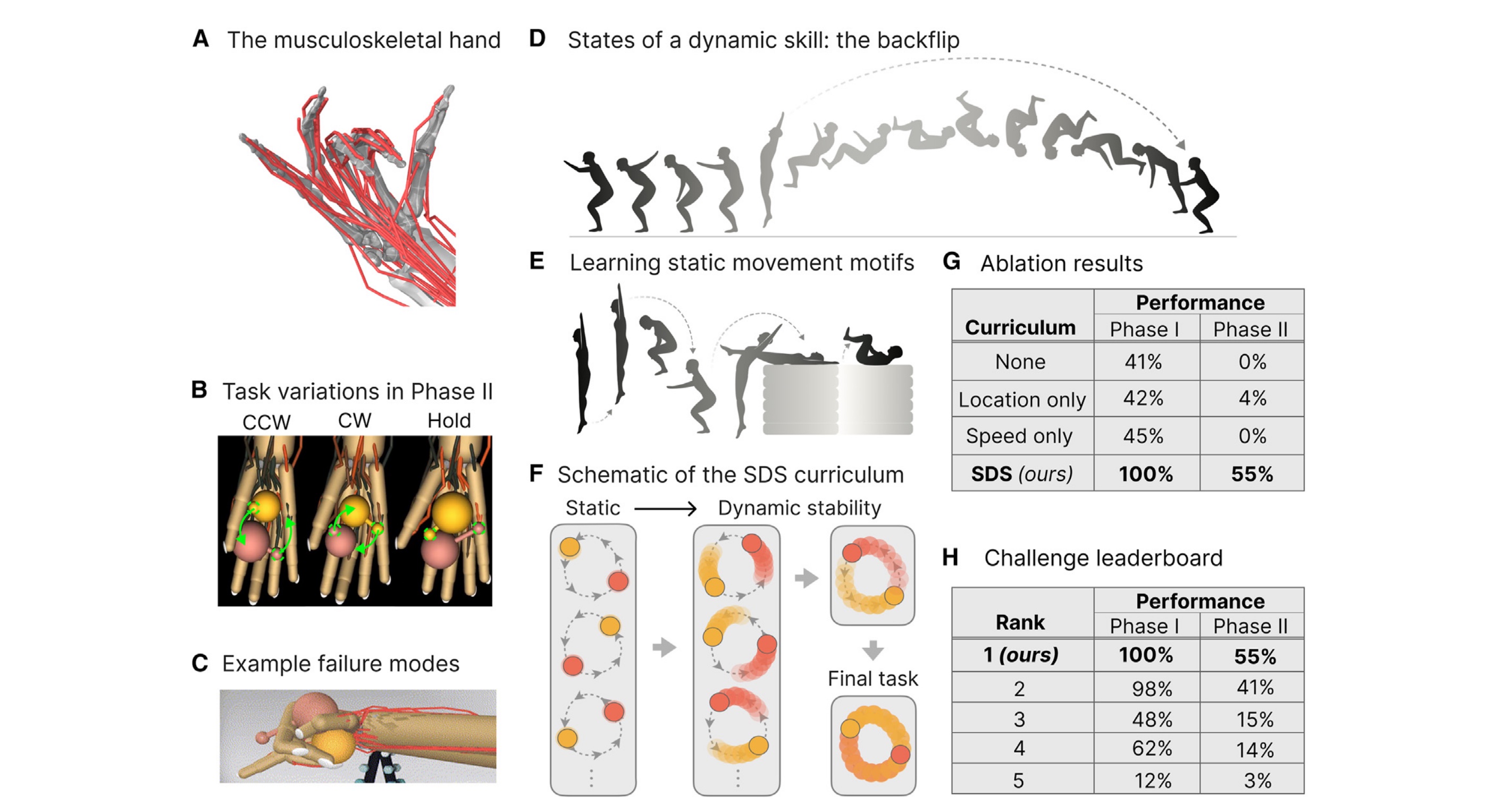

Watching any expert athlete makes it apparent that the brain has mastered elegant control of the body. This is an astonishing feat, especially considering the inherent challenges of slow hardware and the sensory and motor latencies that impede control. Understanding how the brain achieves skilled behavior is one of the core questions of neuroscience, and we tackle it through modeling with reinforcement learning and control theory.

Task-driven Models of Proprioception

We develop normative theories and models for sensorimotor transformations and learning. Work in the past decade has demonstrated that networks trained on object-recognition tasks provide excellent models of the visual system. Yet for sensorimotor circuits this fruitful approach is less explored, perhaps due to the lack of datasets comparable to ImageNet.

Latest Research

Breakthroughs and discoveries from our lab

AS Chiappa, B An, M Simos, C Li, A Mathis

S Ye*, H Qi*, A Mathis**, MW Mathis**

M Simos, AS Chiappa, A Mathis

E Kozlova, A Bonnetto, A Mathis

A Bonnetto*, H Qi*, F Leong, M Tashkovska, M Rad, S Shokur, F Hummel, S Micera, M Pollefeys, A Mathis

V Gabeff, H Qi, B Flaherty, G Sumbül, A Mathis*, D Tuia*

AS Chiappa, P Tano, N Patel, A Ingster, A Pouget, A Mathis

Open Science & Community Impact

We are passionate about open-source code and making our tools broadly accessible to the scientific community.

Join Our Team

We are actively looking for undergraduate, master's, and PhD students with interests in behavioral analysis and modeling sensorimotor learning. We also regularly recruit postdoctoral fellows.